Integration from Azure Blob through X++

IMPORT INTEGRATION FROM AZURE BLOB THROUGH X++

This document walks through the steps needed to fetch files from a container in an Azure Blob Storage account and process their data as required.

Azure Blob Storage is Microsoft's object storage solution for the cloud. Blob storage is optimized for storing massive amounts of unstructured data. Blob storage is designed for:

· Serving images or documents directly to a browser.

· Storing files for distributed access.

· Streaming video and audio.

· Writing to log files.

· Storing data for backup and restore, disaster recovery, and archiving.

· Storing data for analysis by an on-premises or Azure-hosted service.

Here is how to configure the storage account:

Step# 01:

Navigate to https://portal.azure.com/ and log in with your credentials.

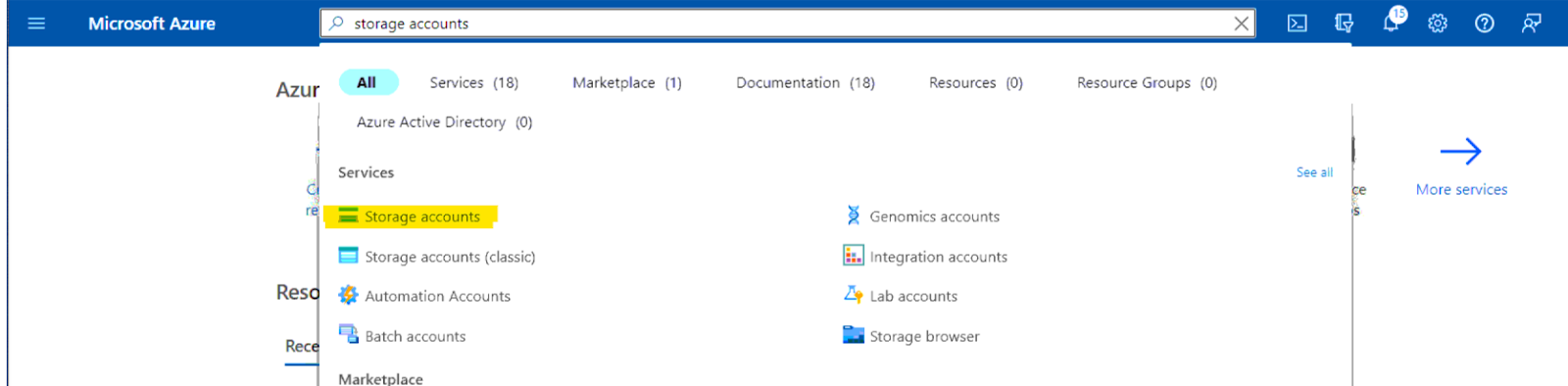

Step# 02:

Search for "Storage accounts" and click the first service in the results.

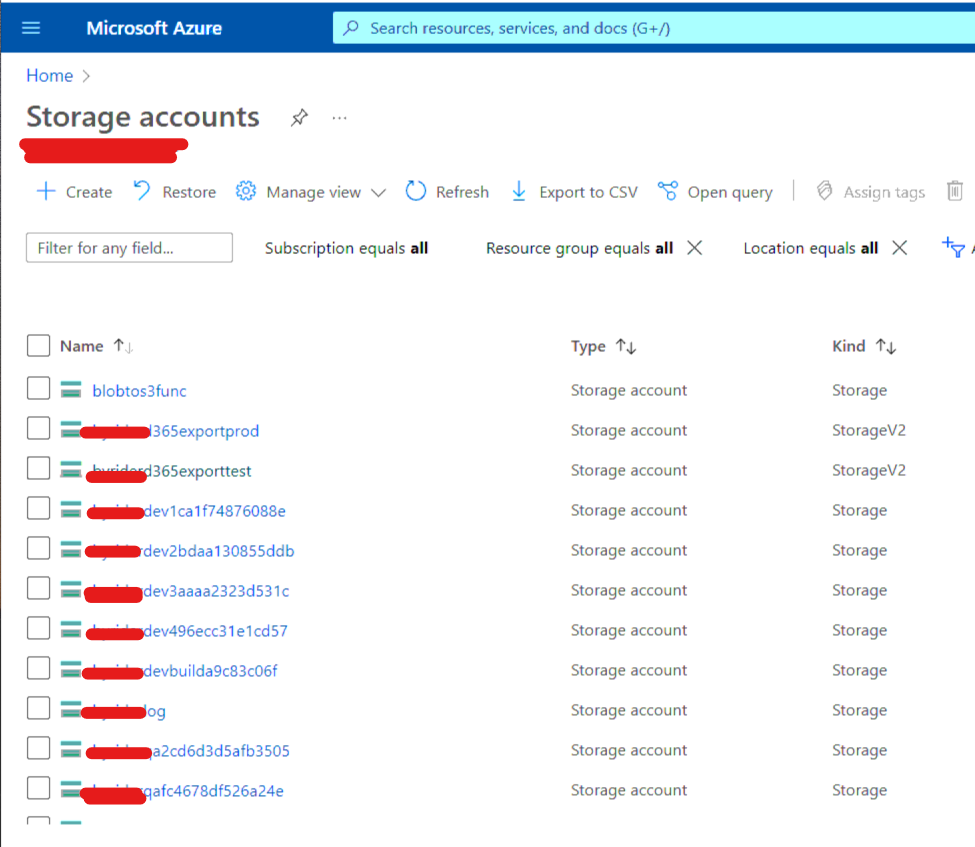

Step# 03:

The following page is displayed. Use an existing storage account or create a new one as required. In this example, we use an existing storage account.

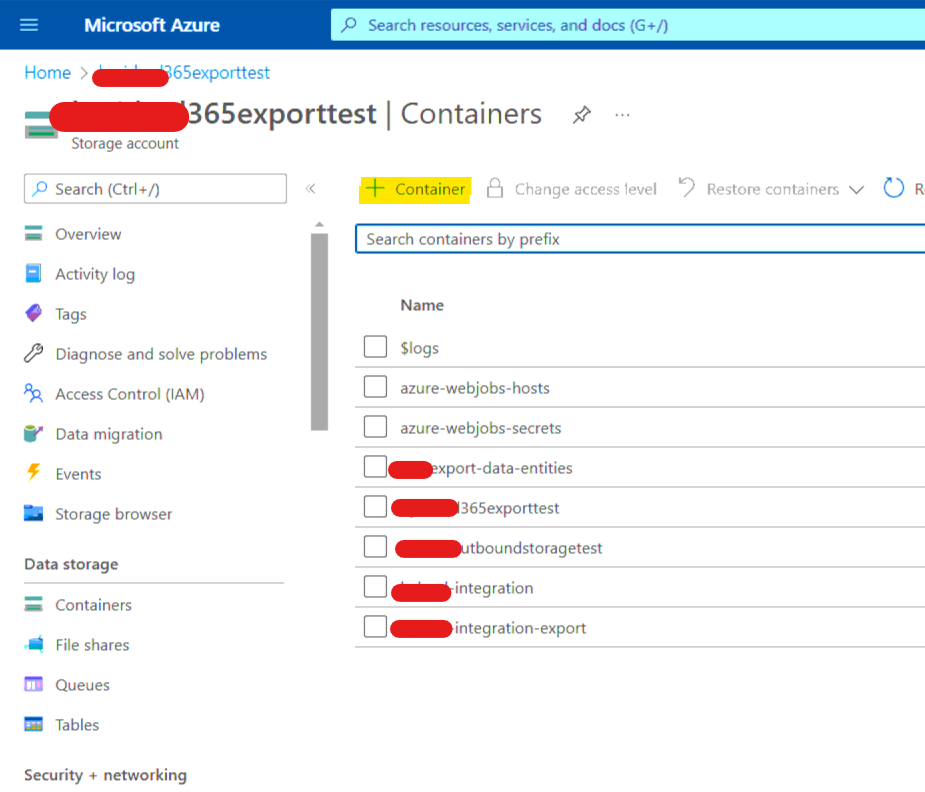

Step# 04:

In the selected storage account, go to Data storage > Containers and select your container, or create a new one.

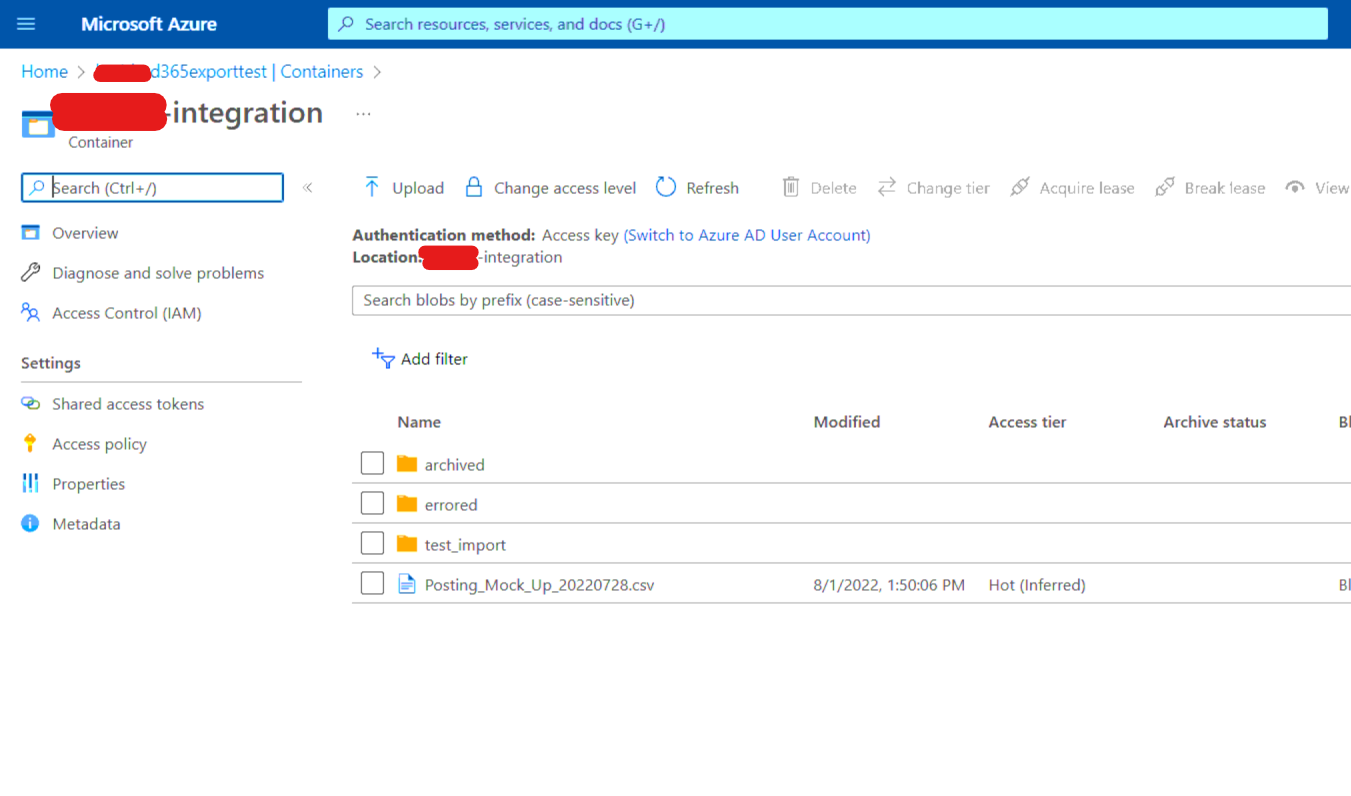

Step# 05:

In the selected container, you can upload your file(s). They can be text, Excel, PNG, JPEG, PDF or other files.

To create a folder, click Upload and choose the file you want to upload. Expand the Advanced section, enter the folder name in Upload to folder, and click the Upload button. The folder is created containing the uploaded file.

NOTE: If you delete all the files in a folder, the folder itself is deleted as well, because in a standard storage account (without hierarchical namespace) folders are only prefixes of the blob names.

Step# 06:

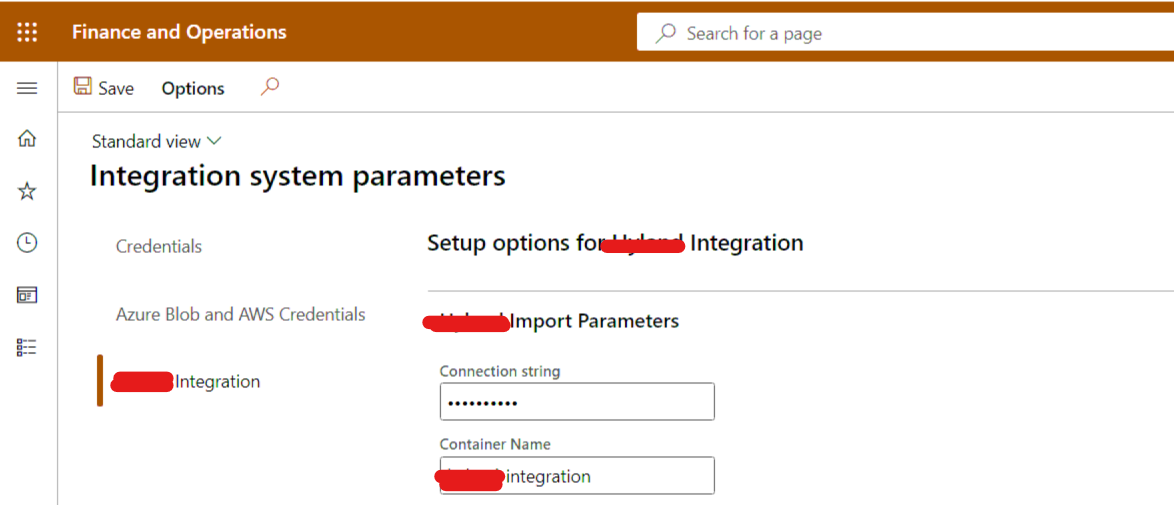

Now we need to set up some parameters in D365FO that are used to connect D365FO to the Azure storage account.

This requires a connection string and the name of the container to use.

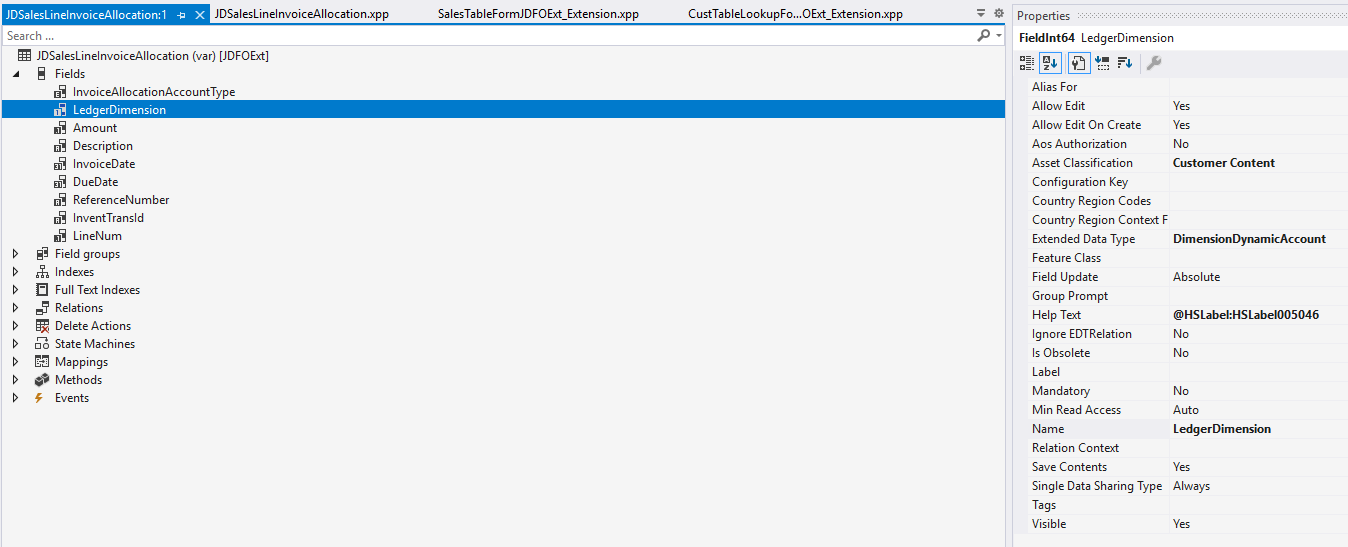

Create a new table, or use an existing one, to store the connection string and the container name.
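A common pattern for a parameter table is a static `find()` method, so the import code does not need its own `select` statement. The sketch below assumes the table is named `BYRIntegrationParameters` with the `ConnectionString` and `ContainerName` fields used later in this post; the `find` and `findValidated` methods are hypothetical additions, not part of the original solution.

```xpp
/// <summary>
/// Hypothetical helper methods on the BYRIntegrationParameters table.
/// </summary>
public class BYRIntegrationParameters extends common
{
    /// <summary>
    /// Returns the parameter record for the current company (empty buffer if none exists).
    /// </summary>
    public static BYRIntegrationParameters find(boolean _forUpdate = false)
    {
        BYRIntegrationParameters parameters;

        parameters.selectForUpdate(_forUpdate);
        select firstonly parameters;

        return parameters;
    }

    /// <summary>
    /// Returns the parameter record, or throws if the connection string or container name is missing.
    /// </summary>
    public static BYRIntegrationParameters findValidated()
    {
        BYRIntegrationParameters parameters = BYRIntegrationParameters::find();

        // An empty str is false in X++, so this catches blank fields as well as a missing record
        if (!parameters.ConnectionString || !parameters.ContainerName)
        {
            throw error("Enter the connection string and container name in the integration parameters.");
        }

        return parameters;
    }
}
```

With this in place, the import method could start with `integrationParameters = BYRIntegrationParameters::findValidated();` instead of the `select firstonly` statement, so a missing setup fails with a clear message rather than a connection-string parse error.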

Step# 07:

Create a new parameter form in D365FO and expose these fields so the values can be entered manually.

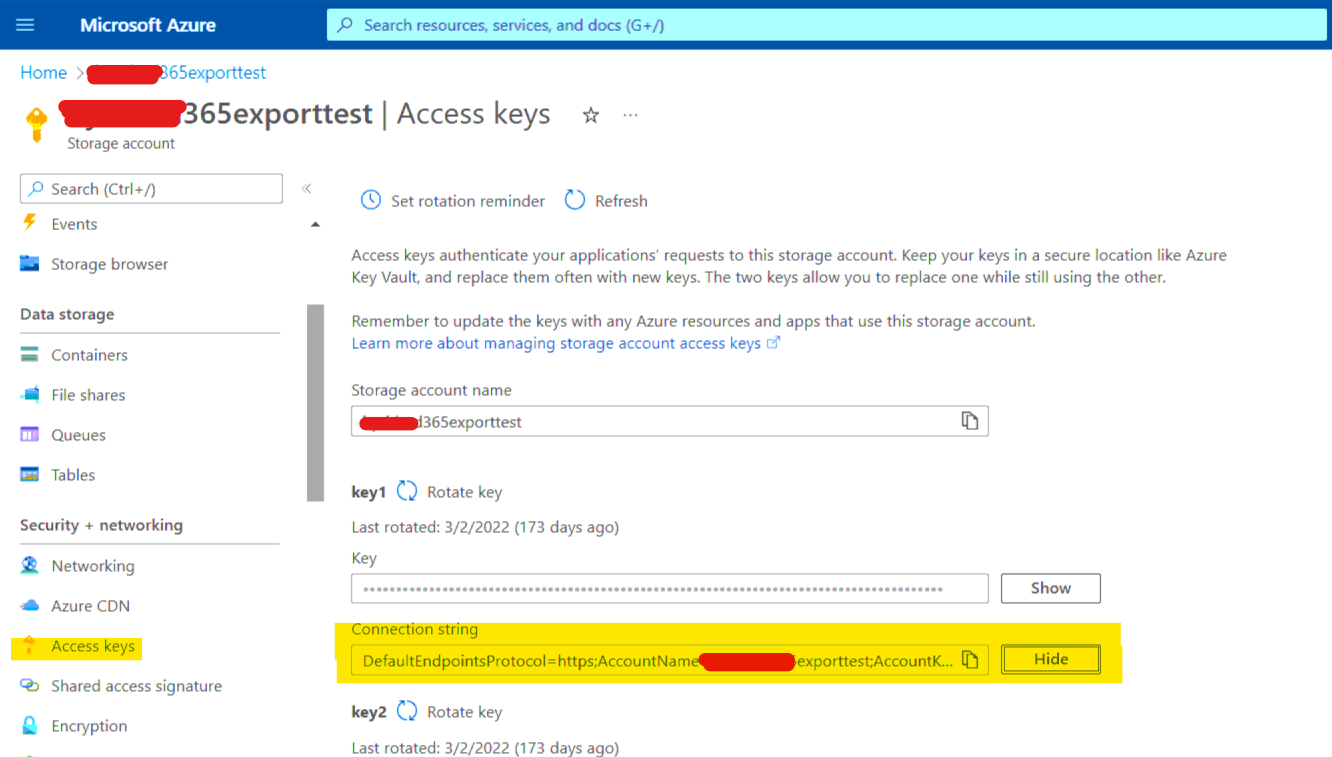

Step# 08:

Navigate back to the storage account list and select your storage account. Under Security + networking, click Access keys and copy the Connection string.

Paste this connection string into your D365FO parameter form, and enter your container name as well.
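Before building the full import, it can help to confirm that the saved values actually reach the container. The runnable class below is a hypothetical helper (`BYRIntegrationConnectionCheck` is not part of the original solution); it uses the same `Microsoft.WindowsAzure.Storage` calls as the import code, plus `CloudBlobContainer.Exists`.

```xpp
using BlobStorage = Microsoft.WindowsAzure.Storage;

/// <summary>
/// Hypothetical runnable class that checks whether the saved parameters can reach the container.
/// </summary>
internal final class BYRIntegrationConnectionCheck
{
    public static void main(Args _args)
    {
        BYRIntegrationParameters integrationParameters;
        BlobStorage.CloudStorageAccount storageAccount;
        BlobStorage.Blob.CloudBlobClient blobClient;
        BlobStorage.Blob.CloudBlobContainer blobContainer;

        select firstonly ConnectionString, ContainerName from integrationParameters;

        try
        {
            // Parse throws if the connection string is malformed
            storageAccount = BlobStorage.CloudStorageAccount::Parse(integrationParameters.ConnectionString);
            blobClient = storageAccount.CreateCloudBlobClient();
            blobContainer = blobClient.GetContainerReference(integrationParameters.ContainerName);

            // Exists sends a request to the storage account, so a wrong account key also surfaces here
            if (blobContainer.Exists(null, null))
            {
                info(strFmt("Container '%1' is reachable.", integrationParameters.ContainerName));
            }
            else
            {
                warning(strFmt("Container '%1' does not exist in this storage account.", integrationParameters.ContainerName));
            }
        }
        catch (Exception::CLRError)
        {
            // .NET exceptions thrown by the storage SDK are reported to X++ as CLR errors
            error(CLRInterop::getLastException().ToString());
        }
    }
}
```

One way to run it without creating a menu item is the SysClassRunner menu item, by appending `&mi=SysClassRunner&cls=BYRIntegrationConnectionCheck` to the D365FO URL.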

Now create a flow that calls the class that actually does the import job. In my case, I used a menu item that calls a controller class, which in turn uses a service class containing the rest of the code.
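A minimal sketch of that flow, assuming an action menu item whose Object property points at a runnable class. The name `BYRIntegrationImportController` is hypothetical; for scheduled batch runs, a SysOperation-based controller would be the usual alternative, which is not shown here.

```xpp
/// <summary>
/// Hypothetical controller: an action menu item pointing at this class runs main().
/// </summary>
internal final class BYRIntegrationImportController
{
    public static void main(Args _args)
    {
        BYRIntegrationImportService service = new BYRIntegrationImportService();

        // Delegate the actual work to the service class that reads the blobs
        service.importRecords();

        info("Import from Azure Blob Storage finished.");
    }
}
```

Keeping the controller this thin means the service class can later be called from a batch job or a test without going through the menu item.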

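Add the following `using` alias at the top of the class file, so the storage SDK types can be referenced with a short prefix:

```xpp
using BlobStorage = Microsoft.WindowsAzure.Storage;
```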

Next, declare the variables used to reference the storage account, the container and the blobs:

```xpp
// client-side logical representation of the Blob service, used to create container references
BlobStorage.Blob.CloudBlobClient blobClient;
// the container we are fetching files from
BlobStorage.Blob.CloudBlobContainer blobContainer;
// the storage account being used
BlobStorage.CloudStorageAccount storageAccount;
// folder (path prefix) the files are read from: source directory
BlobStorage.Blob.CloudBlobDirectory blobDirectory;
// folder the file is moved to after the import: destination directory
BlobStorage.Blob.CloudBlobDirectory blobDestinationDirectory;
// reference to the file (blob) fetched for import
BlobStorage.Blob.CloudBlockBlob listBlob;
// reference to the file (blob) uploaded to the destination folder after the import
BlobStorage.Blob.CloudBlockBlob destinationBlobs;
// parts of the blob name split on '/', used to get the file name
container fileContainer;
// table that stores the connection string and container name
BYRIntegrationParameters integrationParameters;
// list of items in the source folder and an enumerator to walk through it
System.Collections.IEnumerable istEnumerable;
System.Collections.IEnumerator istEnumerator;
// current list item: either a blob (file) or a directory (folder)
BlobStorage.Blob.IListBlobItem item;
// stream used to read the file content
System.IO.Stream memory;
```

Next, populate these variables from the parameter table and the storage SDK classes:

```xpp
select firstonly ConnectionString, ContainerName from integrationParameters;

storageAccount = BlobStorage.CloudStorageAccount::Parse(integrationParameters.ConnectionString);
blobClient = storageAccount.CreateCloudBlobClient();
blobContainer = blobClient.GetContainerReference(integrationParameters.ContainerName);

// the folder from which we read the files
blobDirectory = blobContainer.GetDirectoryReference('unprocessed');

// list the items directly inside that folder (no flat listing, no extra details)
istEnumerable = blobDirectory.ListBlobs(false, BlobStorage.Blob.BlobListingDetails::None, null, null);
istEnumerator = istEnumerable.GetEnumerator();
```

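Now loop over the list. For each file, `listBlob` holds a reference to the blob, and `memory` opens a read stream on it that is passed to the business logic. Afterwards, the file is moved to the errored or archived folder, depending on the result.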

```xpp
// fetch every file in the source folder and send it to the method that reads it
while (istEnumerator.MoveNext())
{
    item = istEnumerator.Current;

    // process only blobs (files); subfolders are returned as CloudBlobDirectory items
    if (item is BlobStorage.Blob.CloudBlockBlob)
    {
        BlobStorage.Blob.CloudBlockBlob blob = item; // blob.Name holds the full path, e.g. 'unprocessed/file.txt'
        blob.FetchAttributes(null, null, null);

        // split the path on '/' to get the file name; false keeps numeric-looking names as strings
        fileContainer = str2con(blob.Name, '/', false);

        // conPeek(fileContainer, conLen(fileContainer)) returns the last element, i.e. the actual file name
        listBlob = blobDirectory.GetBlockBlobReference(conPeek(fileContainer, conLen(fileContainer)));

        memory = listBlob.OpenRead(null, null, null);
        this.readFileData(memory);

        // get the errors that occurred during processing
        Notes exceptionMessage = BYRIntegrationPostingManager::geterrorDetails();

        // on errors, move the file to the errored folder and upload a separate error log file
        if (exceptionMessage != '')
        {
            blobDestinationDirectory = blobContainer.GetDirectoryReference('errored');
            str errorfileName = 'Error Log - ' + conPeek(fileContainer, conLen(fileContainer));
            BlobStorage.Blob.CloudBlockBlob errorBlob = blobDestinationDirectory.GetBlockBlobReference(errorfileName);
            errorBlob.UploadText(exceptionMessage, null, null, null, null);
        }
        // on a successful import, move the file to the archived folder
        else
        {
            blobDestinationDirectory = blobContainer.GetDirectoryReference('archived');
        }

        destinationBlobs = blobDestinationDirectory.GetBlockBlobReference(conPeek(fileContainer, conLen(fileContainer)));

        // readFileData left the stream at its end, so rewind it before copying the content
        memory.Position = 0;
        destinationBlobs.UploadFromStream(memory, null, null, null);
        memory.Close();

        // delete the file from the unprocessed folder
        listBlob.Delete(BlobStorage.Blob.DeleteSnapshotsOption::None, null, null, null);
    }
}
```
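The loop above splits `blob.Name` with `str2con` to get the file name, and reads the blob through a stream that has to be rewound before it is re-uploaded. Two hypothetical helpers (`fileNameFromBlob` and `downloadToMemory` on a made-up class `BYRIntegrationBlobHelper`, not part of the original solution) sketch an alternative, assuming the files are small enough to hold in memory: the file name is taken after the last `/`, and `CloudBlockBlob.DownloadToStream` copies the whole blob into a `MemoryStream` that can be read and rewound freely.

```xpp
using BlobStorage = Microsoft.WindowsAzure.Storage;

/// <summary>
/// Hypothetical helper methods for the import loop.
/// </summary>
internal final class BYRIntegrationBlobHelper
{
    /// <summary>
    /// Returns the part of a blob name after the last '/', e.g. 'unprocessed/orders.txt' gives 'orders.txt'.
    /// </summary>
    public static str fileNameFromBlob(str _blobName)
    {
        int nameLength = strLen(_blobName);
        // search backwards from the last character; 0 means the name has no folder prefix
        int lastSlash = strFind(_blobName, '/', nameLength, -nameLength);

        return subStr(_blobName, lastSlash + 1, nameLength - lastSlash);
    }

    /// <summary>
    /// Downloads the whole blob into memory and rewinds the stream so it can be read from the start.
    /// </summary>
    public static System.IO.MemoryStream downloadToMemory(BlobStorage.Blob.CloudBlockBlob _blob)
    {
        System.IO.MemoryStream memoryStream = new System.IO.MemoryStream();

        _blob.DownloadToStream(memoryStream, null, null, null);
        memoryStream.Position = 0;

        return memoryStream;
    }
}
```

Inside the loop, `memory = BYRIntegrationBlobHelper::downloadToMemory(blob);` could then replace the `OpenRead` call; because `memory` is declared as `System.IO.Stream`, a `MemoryStream` can be assigned to it.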

Overall, the service class looks like this:

```xpp
using BlobStorage = Microsoft.WindowsAzure.Storage;

/// <summary>
/// Service class that imports the data from Azure Blob files and inserts it into a table
/// </summary>
class BYRIntegrationImportService
{
    /// <summary>
    /// Imports the files from Azure Blob Storage
    /// </summary>
    public void importRecords()
    {
        // client-side logical representation of the Blob service
        BlobStorage.Blob.CloudBlobClient blobClient;
        // the container being processed
        BlobStorage.Blob.CloudBlobContainer blobContainer;
        // the storage account being used
        BlobStorage.CloudStorageAccount storageAccount;
        // source folder the files are read from
        BlobStorage.Blob.CloudBlobDirectory blobDirectory;
        // destination folder the file is moved to after the import
        BlobStorage.Blob.CloudBlobDirectory blobDestinationDirectory;
        BlobStorage.Blob.CloudBlockBlob destinationBlobs;
        container fileContainer;
        BYRIntegrationParameters integrationParameters;
        System.Collections.IEnumerable istEnumerable;
        System.Collections.IEnumerator istEnumerator;
        BlobStorage.Blob.IListBlobItem item;
        BlobStorage.Blob.CloudBlockBlob listBlob;
        System.IO.Stream memory;

        select firstonly ConnectionString, ContainerName from integrationParameters;

        storageAccount = BlobStorage.CloudStorageAccount::Parse(integrationParameters.ConnectionString);
        blobClient = storageAccount.CreateCloudBlobClient();
        blobContainer = blobClient.GetContainerReference(integrationParameters.ContainerName);
        blobDirectory = blobContainer.GetDirectoryReference('unprocessed');

        istEnumerable = blobDirectory.ListBlobs(false, BlobStorage.Blob.BlobListingDetails::None, null, null);
        istEnumerator = istEnumerable.GetEnumerator();

        // fetch every file in the source folder and send it to the method that reads it
        while (istEnumerator.MoveNext())
        {
            item = istEnumerator.Current;

            if (item is BlobStorage.Blob.CloudBlockBlob)
            {
                BlobStorage.Blob.CloudBlockBlob blob = item;
                blob.FetchAttributes(null, null, null);

                fileContainer = str2con(blob.Name, '/', false);
                listBlob = blobDirectory.GetBlockBlobReference(conPeek(fileContainer, conLen(fileContainer)));

                memory = listBlob.OpenRead(null, null, null);
                this.readFileData(memory);

                Notes exceptionMessage = BYRIntegrationPostingManager::geterrorDetails();

                if (exceptionMessage != '')
                {
                    blobDestinationDirectory = blobContainer.GetDirectoryReference('errored');
                    str errorfileName = 'Error Log - ' + conPeek(fileContainer, conLen(fileContainer));
                    BlobStorage.Blob.CloudBlockBlob errorBlob = blobDestinationDirectory.GetBlockBlobReference(errorfileName);
                    errorBlob.UploadText(exceptionMessage, null, null, null, null);
                }
                else
                {
                    blobDestinationDirectory = blobContainer.GetDirectoryReference('archived');
                }

                destinationBlobs = blobDestinationDirectory.GetBlockBlobReference(conPeek(fileContainer, conLen(fileContainer)));

                // rewind the stream that readFileData consumed before copying it
                memory.Position = 0;
                destinationBlobs.UploadFromStream(memory, null, null, null);
                memory.Close();

                listBlob.Delete(BlobStorage.Blob.DeleteSnapshotsOption::None, null, null, null);
            }
        }
    }

    /// <summary>
    /// Reads the file content line by line and calls the insert logic
    /// </summary>
    /// <param name = "_fileStream">stream of the file from Azure</param>
    private void readFileData(System.IO.Stream _fileStream)
    {
        System.IO.StreamReader reader = new System.IO.StreamReader(_fileStream);
        str lineStr;
        container con;

        _fileStream.Position = 0;

        while (reader.Peek() >= 0)
        {
            lineStr = reader.ReadLine();
            con = str2con(lineStr, '|');

            // byrHylPostingTable is the target table buffer; it must be declared (e.g. as a class member)
            byrHylPostingTable.clear();
            //business logic
        }
    }
}
```
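The `//business logic` placeholder is where each pipe-separated line is mapped to table fields. As a hedged illustration only, the runnable class below parses two sample lines in an assumed `ItemId|Quantity|Name` layout (both the layout and the class name `BYRPipeLineParseDemo` are hypothetical) and reads each field with `conPeek`; in the real method, the values would be assigned to `byrHylPostingTable` fields before calling `insert()`.

```xpp
/// <summary>
/// Hypothetical demo: parses pipe-separated lines the same way readFileData does.
/// </summary>
internal final class BYRPipeLineParseDemo
{
    public static void main(Args _args)
    {
        // two sample lines in an assumed ItemId|Quantity|Name layout
        System.IO.StringReader reader = new System.IO.StringReader("A001|5|Widget\nA002|12|Gadget");
        str lineStr;
        container con;
        str itemId;
        real quantity;
        str itemName;

        while (reader.Peek() >= 0)
        {
            lineStr = reader.ReadLine();

            // skip blank lines, e.g. an empty line at the end of the file
            if (!lineStr)
            {
                continue;
            }

            // false keeps values such as '5' as strings instead of converting them to int64
            con = str2con(lineStr, '|', false);

            itemId   = conPeek(con, 1);
            quantity = str2num(conPeek(con, 2));
            itemName = conPeek(con, 3);

            info(strFmt("%1: %2 x %3", itemId, quantity, itemName));
        }

        reader.Close();
    }
}
```

Converting each field explicitly (here with `str2num`) keeps the mapping predictable, since with the default `str2con` a value such as `00123` would be turned into the number 123.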

This is how you can import files from Azure Blob Storage with X++, process them, and write them back to the storage account (to the archived or errored folder) afterwards.
